Episode Summary:

This particular podcast covers the material from Chapter 5 of my new book “Statistical Machine Learning: A unified framework” which is now available! The book chapter shows how matrix calculus is very useful for the analysis and design of both linear and nonlinear learning machines with lots of examples.

Show Notes:

Hello everyone! Welcome to the 83nd podcast in the podcast series Learning Machines 101. In this series of podcasts my goal is to discuss important concepts of artificial intelligence and machine learning in hopefully an entertaining and educational manner.

This particular podcast is actually the sixth episode in a special series of episodes designed to provide commentary of my new book. The book’s title is “Statistical Machine Learning: A unified framework” published by CRC Press in their “Texts in Statistical Science” series. The basic idea of the book is that it is designed to provide mathematical tools from the fields of nonlinear optimization theory and mathematical statistics for the analysis and design of machine learning algorithms. If you have an undergraduate degree in computer science or engineering or math, then this book is a great way to get up-to-speed with some of the most important widely used mathematical tools in machine learning used by the top experts in machine learning. You can learn more about my new book by visiting the website: www.statisticalmachinelearning.com.

Each podcast in this special series describes a chapter from my new book. Each podcast is typically divided into four sections. The first section provides an easy-to-understand self-contained overview of the key concepts from the relevant chapter. The second section is specifically designed to provide guidance to the human student who is using the book for self-study. The third section is designed to provide guidance for the human instructor who is using the book for instruction. The fourth section is designed to provide guidance for robot web spiders (also called web spiderbots or web crawlers) so they can find this podcast and properly index it for humans. But even if you are not a robot web spider…you shouldn’t feel left out! Humans familiar with the relevant terminology will also find this fourth section of the podcast useful for a quick technical overview of what is covered in the book chapter.

The main focus of this particular episode covers the material in Chapter 5 of my new forthcoming book titled “Statistical Machine Learning: A unified framework.” Chapter 5 is titled “Matrix Calculus for Machine Learning”.

[music interlude]

Before beginning out discussion, it would be helpful to review the previous podcast which is Episode 82 of Learning Machines 101 which covers vectors and matrices if you are not familiar with vectors and matrices.

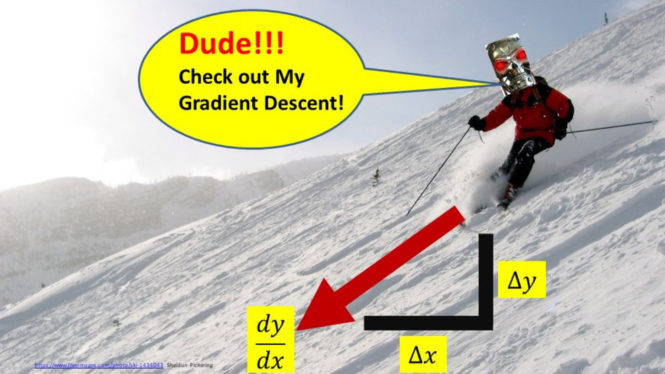

An important key idea for many machine learning algorithms is the concept of “gradient descent”. The basic idea is that suppose we have a machine learning algorithm whose performance can be measured in terms of some performance function. An example of a performance function might be the prediction error of the learning machine. The learning machine’s prediction error depends upon the training stimuli that we used to train the learning machine but it also depends upon the parameters of the learning machine. As previously noted, the “parameters” of the learning machine are adjusted during the learning process with the goal of improving the learning machine’s performance. Note that the prediction error of the learning machine is essentially a function of its parameter values. If the learning machine has a good set of parameter values, then it will have a low prediction error. If the learning machine has a bad set of parameter values, then it will have a high prediction error.

Now this is where the concept of “gradient descent” comes into play. It turns out that one can prove the following important theorem. Suppose that we make a very small perturbation to all of the learning machine’s parameters. Specifically, the perturbation added to a particular parameter value is defined as some small negative number multiplied by the derivative of the prediction error with respect to that parameter value. It can be shown that for a large class of prediction error functions that this method of updating the parameters has the property that the prediction error for the updated parameter values will always be smaller than the prediction error for the original parameter values. This is called the method of gradient descent. This method of updating the parameters of a learning machine so that it’s prediction error performance is improved is a key idea for many machine learning algorithms. The concept of gradient descent was discussed in Episode 65 of Learning Machines 101 (www.learningmachines101.com).

In Episode 23 of Learning Machines 101 (www.learningmachines101.com) we discussed the concept of deep learning neural networks. In a feedforward deep learning neural network, a unit is essentially a function which computes a state value from its parameter values and the states of the units to which it is connected. Each state value is represented as a number. Intuitively, the state of a unit is analogous to the degree of activity of a neuron and the parameters specify the degree to which the activity of one neuron influences another.

Of course, the next challenge is methods for computing the derivatives to implement the gradient descent algorithm. In many machine learning applications, the prediction error is a very complicated function of the parameter values so its not immediately obvious how to compute the necessary component derivatives of the vector derivative. In fact, even computing just one of these derivatives would seem to be extremely challenging yet we often need to compute many such derivatives. We can address this problem by using an important principle which I will call “function decomposition”.

In a feedforward deep learning neural network, the output unit layer is a set of output units which are functions of parameters and the states of a collection of hidden units. Those hidden unit states are, in turn, functions of additional parameters and the states of another set of hidden units and so on. Eventually the final set of hidden units is a function of parameters and the input units which are used to specify the numerical states of an input pattern.

In the deep learning neural network situation we are expressing a very complicated function which is the prediction error as the composition of a bunch of simpler functions. The discrepancy function which measures the difference between the predicted output and the desired response is a function of the predicted output function. The predicted output function is a function of the output unit connection parameters connecting the hidden units to the output units and the hidden unit functions. The hidden unit functions are a function from the hidden unit connecting parameters connecting the input units to the hidden units and the input pattern vector.

To understand the basic concept of computing derivatives in this type of network, consider the overly simplistic case where we have an output unit connected by an output weight parameter to a hidden unit which is connected by a hidden unit weight parameter to an input unit. The derivative of the prediction error with respect to the hidden unit weight is then computed using the chain rule by multiplying the derivative of the prediction error with respect to the output unit state by the derivative of the output unit state with respect to the hidden unit state and then multiplying that by the derivative of the hidden unit state with respect to the hidden unit weight. Thus, we have a simple algorithm for computing the derivative of the prediction error with respect to a connection weight but the problem is that we have assumed that each layer of units in the network has only one free parameter.

The good news is that we can generalize these basic ideas in a natural way by using matrix calculus. In matrix calculus, when we have a function of many parameters we simply take the derivative of the function with respect to each parameter and arrange all of these derivatives in a list which is called a gradient row vector. So the derivative of a function with respect to parameter vector is a row vector. In matrix calculus, we also will need to take the derivative of a list of functions which is called a vector of functions where each function in the list is a function of the same parameter vector. This derivative turns out to be a matrix called the Jacobian. The first row of the matrix is the gradient row vector for the first function in the list, the second row of the matrix is the gradient row vector for the second function in the list and so on.

With these tools, we can now introduce a generalization of the standard chain rule which is called the matrix chain rule for the situation where we have a layer of output units connected to a layer of hidden units and then a layer of hidden units connected to a layer of input units. We can compute the derivative of the prediction error of this network by first computing the vector derivative of the prediction error with respect to the output unit states, then we compute the Jacobian matrix derivative of the output unit states to the hidden unit states, then we compute the Jacobian matrix derivative of the hidden unit states to the hidden unit connection weight parameters. We multiply the vector derivative of the prediction error by the output unit Jacobian matrix, multiply that by the hidden unit Jacobian matrix, and then multiply that by the hidden unit state to hidden unit weight Jacobian matrix to obtain the derivative of the prediction error with respect to the hidden unit weights! Piece of cake! Well its maybe slightly trickier than I described but you get the general idea!

From a software implementation perspective, we have an object called a “layer object” which specifies how the input unit states to the layer are transformed by a set of parameters to generate the output states. The “layer object” also has a “Transfer Jacobian Matrix” which specifies the formulas for the derivative of the output states of the layer with respect to the input states of the layer. The layer object also has another “Connection Weight Jacobian Matrix” which specifies the formulas for the derivative of the output states of the layer with respect to its connection weights. Many deep neural network learning software packages are based upon these general principles. We construct these layer objects which are modular and self-contained and then we can “tinker-toy” them together to form crazy complicated deep learning neural networks which learn by gradient descent. The necessary derivatives are computed by simply multiplying the Jacobians for each layer together in the appropriate order.

Note that if the derivative of the prediction error with respect to the parameters is equal to a vector of zeros, then this means that the parameters are not updated. When the prediction error derivative with respect to the parameters is a vector of zeros, this is called a “critical point”. You might have learned about critical points in your first calculus class. It is the point where the slope of the function you are trying to minimize is equal to zero. If the function has a minimizer at a particular point then the slope will be zero at that point. The problem is that a complicated function can have multiple minimizers and some minimizers are “deeper” than others.

To fix ideas, imagine a landscape of mountains where the height of a point on a mountain side corresponds to the prediction error of a learning machine. Assume the learning machine has two parameters which specify an individual’s location on the earth where one parameter specifies the latitude (or east-west direction) and one parameter specifies the longitude (or north-south direction). A gradient descent algorithm starts at a random latitude and longitude and then makes a step in a “downwards direction” on the mountain. The goal is to minimize the prediction error and reach sea level using this strategy. The problem, as you can see, is that you might pursue this strategy and end up in small valley nested in the mountains which is hundreds of feet above sea level. In fact, you could also end up on a mountain plateau (also called a saddle point) which is a flat region located in the mountains.

In your introductory calculus course, you probably learned about how to use calculus to check for a local minimum. Basically you check if the first derivative is zero and that the second derivative is positive at a point to determine if you are at a local minimum. It turns out that these ideas can be extended using matrix calculus as well so this will allow us to investigate where we have ended up as a result of the gradient descent learning algorithm and help us to determine whether we should stop or perhaps continue our explorations of the prediction error mountain side in a different way!!!

Finally, in your introductory calculus course you probably learned about Taylor series. The basic idea of a Taylor series is that we can take an arbitrary sufficient smooth function and then over small interval approximate that function by a polynomial such as a quadratic formula. The coefficients of the polynomial are computed using derivatives. Using matrix calculus, we can develop a Taylor series for a function of a high-dimensional parameter vector. The resulting polynomial is more complicated in the multidimensional case and the coefficients are computed using gradient derivative vectors and the second derivative of a function of a parameter vector will turn out to be a matrix.

It turns out such Taylor series approximations are very powerful tools for the analysis and design of not only deep learning neural networks but a very large class of linear and nonlinear learning algorithms. Briefly, when we are designing a learning algorithm we are trying to figure out how to make a small perturbation to the parameters of the learning machine to decrease the value of a highly nonlinear performance measure such as prediction error. A common strategy is to use the Taylor series approximation to approximate the highly nonlinear function in the vicinity of the current guess of the parameter values as either a linear polynomial or a quadratic polynomial. Given this approximation, effective methods for updating the parameter values can be developed since minimizing a linear function or a quadratic function is much easier than minimizing a highly nonlinear function.

Another important application for Taylor series approximations in machine learning is the analysis of generalization performance. Suppose we find parameter values which minimize the prediction error based upon a set of training data. These parameter values presumably will be “close” to the parameter values which minimize the prediction error if we have an infinite amount of training data. If we approximate the prediction error as a function of the parameter values as a quadratic polynomial, it turns out we can get estimates of how close our estimated parameter values and our estimated model fit are to the parameter values and model fit we would obtain on an infinite amount of test data.

So you can see, Taylor Series Approximations using Matrix Calculus are very useful for analyzing and designing machine learning algorithms!!!

I have provided references to several advanced books on Matrix Calculus as well as some classic Matrix Calculus papers in the show notes of this episode at: www.learningmachines101.com . I have also included a paper on “automatic differentiation” which discusses computational implementational issues associated with developing software for automatic application of the matrix chain rule.

[MUSICAL INTERLUDE]

This section of the podcast is designed to provide guidance for the student whose goal is to read Chapter 5. The first section of Chapter 5 is Section 5.1.1 which introduces concepts of deterministic convergence. This section is important because if we want to take about matrix derivatives or convergence of learning algorithms we need to understand what it means when we say a sequence of vectors converges to a vector or when we say a sequence of vectors converges to a particular set of vectors. The concept of a “closed set” is important for a variety of reasons but, in this context, a “closed set” has the property that every convergent sequence of points in a closed set converges to a point which is in the closed set. The Big O and Little O notation are helpful for talking about the order of magnitude of different convergence rates. Don’t try to memorize the definitions in this section but read each definition and try to understand the examples.

In Section 5.1.2, the concept of continuous functions are discussed. Continuous functions are important for a variety of reasons. First, gradient descent requires that we take derivatives and derivatives will not exist if the function is not continuous. Second, suppose that we can show the performance measure for our learning algorithm is decreasing as the parameters are updated. It turns out it is much easier to show that the parameters are converging to a set of parameter values which minimize the performance measure if the performance measure is a continuous function of the parameter values. Third, if the predictions of the learning machine are continuous functions of the parameter values and the inputs, this is usually a desirable feature for generalization and robust performance since it means that small perturbations to the parameter values or inputs won’t change the predictions in a dramatic manner. So section 5.2 provides the mathematical theory we need for working with continuous functions. Again, don’t worry about memorizing this section, just read it and come back to it for reference as needed.

Section 5.2 introduces matrix calculus. That is matrix derivatives, the matrix chain rule and other helpful versions of the matrix chain rule. These sections have lots and lots of examples relevant to the machine learning literature to show how these theorems are actually useful in deriving gradient descent algorithms for machine learning algorithms. There is an emphasis on Shallow and Deep Learning gradient descent algorithms. The best way to learn this material is to work through the example. Read the problem and solution of an example analysis of a machine learning algorithm. Then cover up the solution and see if you can work out the solution to the problem without cheating and looking at the solution provided in the book. Also if some of the steps are missing in the book, try to fill in the missing steps.

Section 5.3.1,5.3.2,5.3.3 introduces the Taylor Series for Matrix Calculus. After introducing the Taylor series, it is used to explain why gradient descent decreases the objective function and how to design stopping criteria for the gradient descent algorithm. Also methods for designing checks for determining if a critical point is a local or global minimizer are provided. The first time you look at this material, you might want to read this section from the perspective of an engineer trying to analyze and design a gradient descent algorithm and design stopping criteria for the algorithm. Then, the second time around, you should look closely at the conditions required for the various theorems to hold.

Section 5.3.4 introduces Lagrange Multipliers and shows how they are very useful for minimizing functions when we have constraints on the parameter space. It is also shown that Lagrange multiplier methods can be used to derive a variety of important learning algorithms such as principal component analysis, deep learning, and support vector machines. I would recommend emphasizing the examples in this section and once you have become comfortable working through the examples, then take a look at the theorems and the conditions for this to hold.

[MUSICAL INTERLUDE]

This section of the podcast is designed to provide guidance to the instructor whose goal is to teach Chapter 5. Remember these notes are available for download by simply visiting this episode on the website: www.learningmachines101.com. In addition, supplemental teaching materials for the book (as they become available) will be available on the website: www.statisticalmachinelearning.com.

So Chapter 5 is a very long chapter with lots of important information. Still, the focus of the teaching should be on helping the students understand why matrix calculus is relevant and developing skills to do matrix calculus calculations. Once this is achieved, then the technical conditions which are very important can be emphasized.

In Section 5.1.1, I would start by talking to students about why it is important to understand the concept of convergent vector sequence. Remind students that during the learning process, we basically have a sequence of parameter vectors and we are typically interested in whether this sequence will converge to a particular type of vector or even converge to a particular set of vectors. I would emphasize that closed sets are important because they contain all of their limit points. Big O and Little O notation can be discussed in terms of characterizing order of magnitude convergence rates.

In Section 5.1.2, I would start by talking to students about why continuous functions are important. In addition to reviewing the formal definition make sure you show them how to check if a function is continuous by exploiting results such as Theorem 5.1.7 which note that compositions of continuous functions are continuous and so on.

In Sections 5.2.1,5.2.2, 5.2.3 matrix derivatives are covered. Start with telling students the first derivative of function of parameters is a vector of functions and its second derivative is a matrix of functions. Emphasize that a sufficient but not necessary condition for a vector derivative to exist is that all of the partials are continuous.

When you cover the matrix chain rule, emphasize that a key strategy for computing derivatives of complicated functions is to attempt to rewrite the complicated function as a composition of many simple functions. Although there are lots of useful differentiation theorems in Sections 5.2.2 and 5.2.3, I would focus on Theorem 5.2.6 (scalar-vector product derivative rule), Theorem 5.2.7 (Dot Product Derivative Rule) and the list of short useful derivatives in Section 5.2.3.

After providing the students with the concept of the matrix chain rule and 2 or 3 differentiation rules as well as some useful derivatives, then it is helpful to work out derivatives for some linear models. To help motivate the students, I like to emphasize that these derivatives are directly useful for deriving gradient descent algorithms for these linear type networks. Example 5.3.2 discusses deriving a linear regression gradient descent algorithm, Example 5.3.1 discusses deriving a gradient descent algorithm for Hebbian learning, Exercise 5.3.1 discusses deriving a gradient descent algorithm for logistic regression, and Exercise 5.3.3 discusses deriving a gradient descent algorithm for multinomial logit regression. Example 5.2.17 and Algorithm 5.2.1 extend these ideas to shallow and deep learning networks.

Section 5.3.3 introduces the multivariate Taylor series. I would recommend first doing the scalar Taylor series and make sure everybody understands how that works. Then present the multivariate version. Then you can show the relevance of the Taylor Series by explain why gradient descent works using this expansion and show how to use the expansion to check for a strict local minimizer. Make sure to introduce the concept of a convex function and why that is importance since any strict local minimizer of a convex function is the unique global minimizer. Also note that a smooth convex function may be defined as a function whose Hessian is positive semidefinite on a convex set. Show how this works in practice by working through Example5.3.9.

Section 5.3.4 discusses Lagrange Multipliers. Spend some time explaining the goal of the Lagrange Multiplier Theorem in terms of Figure 5.4. Explain the concept of a Lagrangian. The cross-entropy minimization Example 5.3.10 is a good introductory example of how this works. Then when the scalar case is clearly understood, present some of the high-dimensional examples such as the Principal Components Analysis derivation in Example 5.3.11 and the Gradient Descent for Shallow networks example 5.3.15.

[MUSICAL INTERLUDE]

This section is designed mainly for search engines and web spider robots but humans will find this final section relevant also!!

The objective of Chapter 5 is to introduce important matrix calculus concepts and tools for supporting machine learning algorithm and design. The chapter begins with a review of relevant elementary real analysis concepts. Convergence of sequences of high-dimensional vectors and properties of continuous functions are discussed. Matrix calculus identities are then derived using the vector and matrix chain rules. These matrix calculus identities are then used to derive gradient descent algorithms for linear regression, logistic regression, softmax regression, shallow nets (also known as perceptrons), and deep learning. The vector Taylor series expansion is then introduced. The Taylor series expansion method is used to show that a gradient descent algorithm that minimizes an objective function modifies the system state vector such that the value of the objective function will decrease at each algorithm iteration provided the stepsize is sufficiently small. Next, explicit criteria for determining if a learning algorithm has converged to a critical point, a local minimizer, or a global minimizer are provided. Finally, the Lagrange Multiplier Theorem is used to derive algorithms implementing: principal components analysis (PCA) unsupervised learning algorithm, optimal linear recoding transformations, the soft margin support vector machine, multilayer perceptron gradient descent, and recurrent neural network gradient descent.

[MUSICAL INTERLUDE]

My new book “Statistical Machine Learning” can be purchased right now.

The physical book turned out really great! I have also checked out the Kindle version of the book and the CRC version of the book and they seem comparable in capabilities. The equations and figures are showing up properly in the Kindle and CRC Ebook versions. So just wanted to let you know!!! A Google Play Book version of the book is also available.

You can purchase the book at a 20% discount (but I don’t know how long this discount will last) on the CRC publisher’s website which can be reached by visiting the website: www.statisticalmachinelearning.com. Make sure to join the Learning Machines 101 community in order to be updated when and how book discounts will be available.

If you are not a member of the Learning Machines 101 community, you can join the community by visiting our website at: www.learningmachines101.com and you will have the opportunity to update your user profile at that time. This will allow me to keep in touch with you and keep you posted on the release of new podcasts and book discounts. You can update your user profile when you receive the email newsletter by simply clicking on the: “Let us know what you want to hear” link!

In addition, I encourage you to join the Statistical Machine Learning Forum on LINKED-IN. When the book is available, I will be posting information about the book as well as information about book discounts in that forum as well.

And don’t forget to follow us on TWITTER. The twitter handle for Learning Machines 101 is “lm101talk”! Also please visit us on Apple Podcast or Stitcher and leave a review. You can do this by going to the website: www.learningmachines101.com and then clicking on the Apple Podcast or Google Podcast or Stitcher.com. This will be very helpful to this podcast! Thank you again so much for listening to this podcast and participating as a member of the Learning Machines 101 community!

And finally…please take care of yourselves and your neighbors in this difficult time by practicing social distancing. In fact, if you want to make a donation to the World Health Organization, you can find a hyperlink to help you do this on the main page of the learning machines 101 website.

Take Care and See you soon!!

REFERENCES:

Golden, R. M. (2020). Statistical Machine Learning: A Unified Framework.

CRC Press. (Chapter 5).

Hoffmann, P. H. W. 2016, Jul. A hitchhiker’s guide to automatic differentiation.Numerical

Algorithms 72 (3), 775–811.

Magnus, J. R. (2010). On the concept of matrix derivative. Journal of Multivariate Analysis, 10, 2200-2206.

Marlow, W. H. (2012). Mathematics for Operations Research. New York: Dover.

Related Learning Machines 101 Podcasts:

Episode LM101-065: How to Design Gradient Descent Learning Machines

Episode LM101-082: How to Analyze and Design Linear MachinesEpisode LM101-023: How to Build a Deep Learning Machine (Function Approximation)