
Episode Summary:

In this episode, we briefly review the Turing Test for Artificial Intelligence (AI), which states that if a computer’s behavior is indistinguishable from the behavior of a thinking human being, then the computer should be called “artificially intelligent”. Some objections to this definition of artificial intelligence are introduced and discussed. At the end of this episode, we discuss the distinction between Strong AI and Weak AI.

Show Notes:

Hello everyone! Welcome to the sixth podcast in the podcast series Learning Machines 101. In this series of podcasts my goal is to discuss important concepts of artificial intelligence and machine learning in a hopefully entertaining and educational manner. In Episode 5, we introduced the Turing Test. The basic idea of the Turing Test is that if a human examiner cannot distinguish the observable behavior of a computer from the observable behavior of a thinking human being, then this provides a practical test for determining whether the computer is thinking. In this episode, we will discuss various objections to the Turing Test.

I will begin by briefly reviewing the description of the Turing Test which Alan Turing proposed in his original 1950 paper and which I discussed in additional detail in Episode 5.

Here is an overview of the original description of Turing’s Test as stated in the paper published in 1950. Turing, at the time, referred to the test as the “Imitation Game”. A human examiner generates questions which are presented to two different human beings who are called the game participants. One participant is a man while the other participant is a woman. The examiner, however, does not interact with the participants in a direct manner. For example, we can imagine that the examiner exchanges text messages with the participants. The examiner generates questions and receives answers from participants X and Y and has no idea whether X is the male and Y is the female or whether X is the female and Y is the male. One of the participants in the game, however, tries to generate responses intended to trick the examiner so that the examiner will incorrectly decide which participant is male and which participant is female.

Now the second part of the Imitation Game involved replacing one of the participants in the game with a computer. The computer now has the job of convincing the examiner that it is a human being of a particular gender. If the examiner’s performance on this task is comparable to the examiner’s performance when the game is played with two human participants, then the computer wins the Imitation Game. Today, we refer to the Imitation Game as the “Turing Test”.
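The pass criterion just described can be sketched in a few lines of Python. This is only an illustration under assumptions that do not come from Turing’s paper: the function names, the made-up game results, and the `tolerance` value used to decide what counts as “comparable” performance are all hypothetical.

```python
def identification_rate(verdicts):
    """Fraction of games in which the examiner identified the participants correctly."""
    return sum(verdicts) / len(verdicts)


def computer_wins(human_games, computer_games, tolerance=0.1):
    """Return True if the examiner does no better against the computer
    than against two human participants, within a tolerance.

    `tolerance` is a hypothetical threshold for "comparable" performance;
    the original description does not specify a number.
    """
    baseline = identification_rate(human_games)
    with_computer = identification_rate(computer_games)
    return with_computer <= baseline + tolerance


# Made-up results: True means the examiner guessed correctly in that game.
human_games = [True, False, True, True, False, True, False, True]
computer_games = [True, False, True, False, True, True, False, True]

print(computer_wins(human_games, computer_games))
```

The point of the sketch is that the criterion is comparative: the computer is judged against how well a human deceiver does in the same game, not against an absolute standard.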

Turing emphasized that the Turing Test should be constructed in such a way that the examiner would not know whether a computer or a human being was being examined. So, in Turing’s 1950 formulation, the examiner would type questions into a teletypewriter and then either a computer or a human being would respond. Another feature of the Turing Test that Turing emphasized was that the examiner should be allowed to ask the computer or human being any possible question.

As previously mentioned, the idea behind the Turing Test is that if a human examiner cannot distinguish the observable behavior of the computer from the observable behavior of a thinking human being, then the test provides a practical way of determining whether the computer is thinking.

Let’s now discuss six different objections to the Turing Test.

The first objection is called the “Consciousness Argument”, which basically says that even if a computer could compose a beautiful song or participate in a lively conversation, this doesn’t mean a thing if the song or the conversation is not due to the internal thoughts and internal emotions of the machine. If the beautiful song or the lively conversation is just the result of applying a large collection of IF-THEN logical rules, then this is not true intelligence. Building a machine that behaves in an intelligent manner doesn’t qualify the machine as intelligent because it does not have “consciousness”. The consciousness argument is similar to the “soul argument”: building a machine that behaves in an intelligent manner doesn’t qualify the machine as intelligent because it does not have a “soul”.

The problem with the consciousness or soul objection is that it is difficult to see how empirical evidence could be collected to support or refute the hypothesis that a machine is writing a sonnet or composing a concerto because of thoughts and emotions felt rather than because of symbol manipulation. The artificial intelligence researcher Marvin Minsky has taken this one step further and argued that we cannot apply this definition to human beings either. In fact, Marvin Minsky argues that human biological machines have an illusion of free will. We delude ourselves into thinking that we have free will when really we are just machines. Free will is simply an epiphenomenon.

I also want to note that the idea that humans are simply biological machines might at first appear to be morally objectionable. One could argue that a human criminal who commits horrible criminal acts is not responsible for his or her actions because the human criminal is just a machine whose behavior is pre-determined. That is, one might argue that the human criminal does not have free will or consciousness. I want to emphasize that scientists who agree with the idea that human biological machines simply have an “illusion of free will” are not necessarily in favor of eliminating criminal justice systems throughout the world!!

In science, one begins by postulating a simplified abstraction of reality which is experimentally testable. We don’t want to begin by including things in our theory such as “souls” or “consciousness” which are difficult to assess using reliable and valid measures. These are presumably high-level emergent phenomena. We construct our theories of human behavior without including notions of “souls” and “consciousness” and “free will” and explore when those theories begin to break down and when it is necessary to include such concepts to account for relevant behavioral data. So, for example, the concept of “free will” is typically avoided in the development of theories of human behavior in the field of experimental psychology but the concept of “free will” is fundamentally important in the development of theories of human behavior relating to the fields of law and moral philosophy.

The second argument against the Turing Test is based on remarks by Lady Lovelace, an English mathematician who worked with Charles Babbage on a programmable mechanical computer, the Analytical Engine, which was partially developed roughly 100 years before Turing published his 1950 paper. Lady Lovelace argued that computers cannot be creative. They are only capable of doing what they are programmed to do. If we don’t know how to do a task, then we can’t program the computer to do the task.

Turing, in his 1950 paper, makes at least two points to refute the objection of Lady Lovelace. First, he notes that one could program a computer to learn from experience, and thus it could acquire knowledge that its programmers did not have. Second, Turing correctly appreciates the idea that although one can explicitly specify a large complex system with many simple components, the interactions of these large numbers of simple components may give rise to unexpected emergent phenomena. The view that a machine can never surprise us rests on an assumption that Turing describes as follows: “This is the assumption that as soon as a fact is presented to a mind all consequences of that fact spring into the mind simultaneously with it. It is a very useful assumption under many circumstances, but one too easily forgets that it is false.”

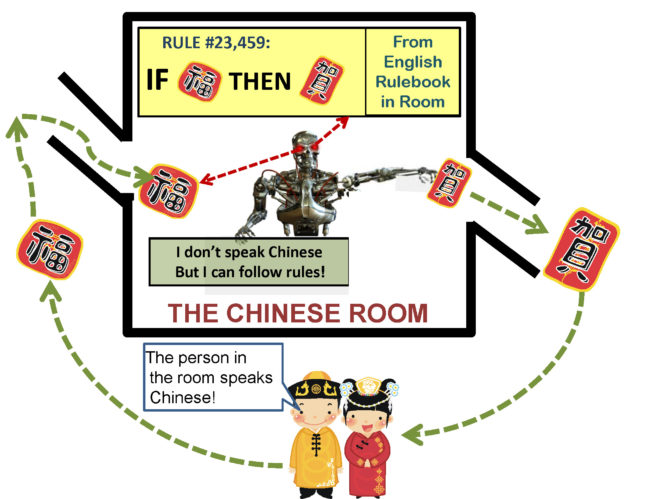

The third argument against the Turing Test is called the Chinese Room Problem. In 1980, the philosopher John Searle described a variation of the following scenario. Suppose we have a room and there is a person in the room. We write a question in Chinese on a piece of paper and slip it under the door. We can write any question on the piece of paper that we like. For example, we might ask: “What is your favorite hobby?”. We then wait for a few minutes, and eventually a piece of paper appears, sticking out from under the bottom of the door. Presumably the person in the room has provided us with a message we are supposed to read. We unfold the piece of paper and see that it has a message written in Chinese. We translate the message and it says “My favorite hobby is racquetball. Racquetball is an awesome sport!”.

We then write a followup question such as “Why is it awesome?” (again we write the question in Chinese). Again we wait a few minutes and a piece of paper appears, sticking out from under the bottom of the door. We translate the message and it says “It’s just fun to hit the racquetball at 100 miles per hour! I don’t really know why I think it is awesome but the rush is exhilarating! I love the power, speed, and pace of the game!” After similar interchanges, we conclude on the basis of our observations that the person in the room must actually speak and understand Chinese and also likes to play racquetball.

However, when we open the door to the room, we find that the person is in a room with a large number of filing cabinets which contain tens of thousands of Chinese symbols. The person does not speak Chinese and only speaks English. There is also a gigantic instruction manual in the room, written in English, which explains how to assemble a response by linking together Chinese symbols given an observed sequence of Chinese symbols.

The person in the room is just a technician who is manipulating symbols in a mindless manner according to the rules of the instruction manual. One version of the Chinese Room argument is that, according to the Turing Test, the person in the room appears to understand and speak Chinese, but in reality the person in the room has no understanding of Chinese. Therefore, the Turing Test, which is based solely upon behavioral characteristics, is an inadequate assessment of intelligence.
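The technician’s job can be caricatured in code. The sketch below is only an illustration and is not taken from Searle’s paper: the rule book is a hypothetical lookup table, and the symbols are opaque placeholder tokens such as `"#12"` rather than real Chinese characters, to stress that the technician never interprets them.

```python
# Hypothetical "instruction manual": maps an incoming sequence of symbols
# to the sequence of symbols to pass back under the door.
# The tokens are meaningless placeholders, not real Chinese.
RULE_BOOK = {
    ("#12", "#7", "#40"): ("#3", "#19"),
    ("#8", "#2"): ("#5", "#5", "#31"),
}

# Symbols to return when the manual has no matching rule (also hypothetical).
DEFAULT_REPLY = ("#99",)


def technician(note):
    """Look up the note in the rule book and copy out the listed reply.

    Nothing here parses or interprets the symbols; it is pure matching.
    """
    return RULE_BOOK.get(tuple(note), DEFAULT_REPLY)


print(technician(["#12", "#7", "#40"]))
```

A real manual that could sustain an open-ended conversation would need vastly more than a fixed table of question and answer pairs, but the technician’s relationship to the symbols would be the same: matching and copying, with no access to what they mean.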

A major objection to the Chinese Room Argument is based upon the following logic. Although it is true that the person in the room has no understanding of Chinese, the question is really whether the “Chinese Room System” understands Chinese. Specifically, the “Chinese Room System” consists of at least 5 components: (1) the room, (2) the person, (3) the English instruction manual, (4) the filing cabinets filled with Chinese symbols, and (5) the paper and pen used by the person in the room. Opponents of the Chinese Room argument argue that it is the “Chinese Room System” that understands Chinese, not the person. This argument is sometimes called the “Systems Interpretation”.

The fourth objection to the Turing Test is called the “Turing-Computable Argument” which is based upon ideas that were introduced in Episode 4 of this podcast series. This objection is based upon the discussion of Gödel’s Theorem and Turing Machines. The objection can be paraphrased to state that every existing computer as well as every computer which could be constructed in the distant future will not be able to compute the solution to certain problems. This is a fundamental “disability” which is shared by all computers. Thus, it is not possible for a computer to pass the Turing Test.

Turing’s response in his 1950 paper to this objection was that although these computational limitations of computers do exist, it is not clear that a human being wouldn’t have the same limitations as a Turing Machine! Computers that can be represented as Turing Machines are not perfect and can not solve every possible problem but human beings are not perfect and might have similar computational limitations.

The fifth argument against the validity of the Turing Test, which is closely related to the “Turing-Computable Argument”, is the Biological Argument. The essential idea is that biology is fundamentally important to intelligence. Given this assumption, one then argues that computers, or more generally Turing Machines, are logic machines. There may exist computations that a biological brain can accomplish which cannot be accomplished by a Turing Machine. This idea is explored in detail in Episode 4. If so, then no computer that can be represented as a Turing Machine will ever pass the Turing Test. Super-Turing machines capable of hypercomputations would be required to solve the kinds of computational problems that a biological brain can solve.

An objection to the Biological Argument is the following thought experiment. Suppose we replace one neuron in a person’s brain with an extremely detailed computer simulation of that neuron, where the inputs and outputs of the computer simulation are directly wired into the person’s biological system. Suppose we continue this process and eventually replace all of the neurons in the person’s brain with such simulated neurons. According to this argument, one has now replaced the person’s brain with a simulated brain which has exactly the same input-output functions as the original biological brain. This simulated brain can then be represented as a Turing Machine. Turing, in fact, used this argument in his 1950 paper to argue for the plausibility of one day constructing digital electronic computers that could think like human beings.

A response to this objection is that the counter-argument misses the point because it assumes that the behavior of a biological neuron can be simulated on a computer as accurately as desired. That is, although replacing someone’s brain or even nervous system with digital electronic technology seems like it might be plausible in the distant future, it might actually be totally impossible because the simulated neurons in the simulated brain are not adequate approximations of true biological neurons.

The sixth argument against the Turing Test, which builds on the simulated brain thought experiment, has been made by Hubert Dreyfus. Dreyfus argues that no one believes that a computer program which simulates the behavior of an automobile is going to transport us from one location to another, so why should we believe that a simulation of the behavior of thinking is equivalent to thinking?

At one level, this argument raised by Dreyfus seems to be essentially the same as the Consciousness Argument, but there is a deeper interpretation of the Dreyfus simulated brain argument, which is that you cannot have a system that can pass the Turing Test unless it has a physical human-like body. This is necessary so that the system can have the same experiences and the same kinds of interactions with the physical world as a human being. In other words, it is not enough to replace every neuron in the nervous system. Every biological cell in the human being needs to be replaced with a computer simulation of that cell, and the entire system must be embodied as a physical robot or android that looks, operates, and learns like a human. This is essentially the important concept of “embodied cognition”, which will be discussed in greater detail in a future episode. In my opinion, this is the only serious objection to the Turing Test.

This discussion leads to two key concepts. The concept of “Strong Artificial Intelligence” or “Strong AI” and the concept of “Weak Artificial Intelligence” or “Weak AI”.

Strong AI means building a machine that can pass the Turing Test and mimic human behavior in every manner. Some researchers have also included in the definition of Strong AI the notion that a system with Strong AI should be considered to have a mind and consciousness. The android “DATA” on Star Trek: The Next Generation is an example of Strong AI. It is unclear whether the construction of an android such as DATA is inevitable, possible, or impossible. So the issue of Strong AI remains controversial. Some leading researchers in the field of AI believe that Strong AI is quite possible while others do not. Most leading researchers in the field of AI would agree, however, that even if an android such as DATA is possible, the technology required to implement it lies many years in the future.

Weak AI means building a machine that performs a specific task in an intelligent manner. So, for example, the beginning of each podcast in the Learning Machines 101 podcast series includes the following blurb…

“artificially intelligent systems recognize and produce speech, control prosthetic limbs, automatically recognize and sort pictures of family and friends, disarm terrorist bombs, perform medical diagnoses, aid travelers in navigation, detect banking fraud, defend your computer against hackers, suggest purchases on Amazon, deliver information using Google search, and automatically pilot vehicles on the ground, in the air, and in outer space”.

These are all examples of successful progress in the area of weak AI.

Progress in the area of Weak AI has advanced rapidly since the beginnings of the field of Artificial Intelligence in the 1950s. Some critics of Weak AI have argued that only simple problems can be solved with weak AI and not the really hard problems which are the basis of true Artificial Intelligence. I strongly disagree with this assertion. Over the decades, weak AI has successfully solved problems once classified as really hard, thus forcing critics to identify even greater challenges for the field of artificial intelligence.

For example, in the late 1960s, some researchers who were overly enthusiastic about the field of artificial intelligence felt that the development of machines that could understand spoken language was just around the corner, while other more pessimistic researchers argued that although computers could play championship checkers, computers would never be able to play championship chess. Decades later, these challenges have been met. We have computer programs that play world-class chess and smart phones that can understand spoken language. As soon as such challenges are met, however, it is easy to construct new challenging problems in AI which cannot be solved using our existing resources and technology. Episode 1 of this podcast series discusses these issues from the perspective that Artificial Intelligence is like a magic show. A behavior may appear to be very indicative of a true artificial intelligence in the sense of Strong AI, but the “mystery” of the artificial intelligence is removed when the “secret” of the intelligent behavior is explained in explicit detail.

We are far from achieving strong AI, but the past several decades have demonstrated tremendous progress in advancing the field of artificial intelligence. This is fine. In fact, I would even argue that Strong AI should not be the goal of the field of Artificial Intelligence. The goal of the field of Artificial Intelligence is to continue to systematically approach and solve specific subproblems in the area of weak AI. Despite media hype, science doesn’t proceed by a scientist making a single discovery at a single moment in time. Theories must be formulated and checked. Data must be collected and analyzed. This takes years and possibly decades. Results must be disseminated and accepted. Similarly, even if one is interested in achieving the goal of Strong AI, actual scientific and engineering progress in this area must be measured in terms of Weak AI milestones.

From this perspective, the field of AI continues to advance in a variety of areas. And, as noted in the opening of each podcast in this podcast series, artificial intelligence is now present in our everyday lives and plays important functional roles.

Further Reading:

Cole, David, “The Chinese Room Argument”, The Stanford Encyclopedia of Philosophy (Summer 2014 Edition), Edward N. Zalta (ed.), http://plato.stanford.edu/entries/chinese-room/

Dreyfus, H. L. (1999). What computers still can’t do: A critique of artificial reason. MIT Press. http://www.amazon.com/What-Computers-Still-Cant Artificial/dp/0262540673/

Minsky, M. (1986). The Society of Mind. Simon and Schuster. http://www.amazon.com/The-Society-Mind-Marvin-Minsky/dp/0671657135/

Searle, J. R. (1980). Minds, brains, and programs. Behavioral and Brain Sciences 3 (3): 417-457. http://cogprints.org/7150/1/10.1.1.83.5248.pdf

Turing, A. M. (1950). Computing machinery and intelligence. Mind: A Quarterly Review of Psychology and Philosophy, 59 (236): 433-460. http://mind.oxfordjournals.org/content/LIX/236/433

Copyright Notice:

Copyright © 2014 by Richard M. Golden. All rights reserved.

Regarding “embodied cognition” being a strong objection to the Turing Test: what if we think of this learning machine as programmed in such a way that it sees a very photorealistic virtual world and interfaces with it through simulated human senses? It would be a virtual creature independent of the computing environment that generates the virtual world.