LM101-030: How to Improve Deep Learning Performance with Artificial Brain Damage (Dropout and Model Averaging)

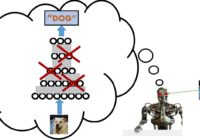

Episode Summary: Deep learning technology has developed rapidly over the past five years, due in part to factors such as better technology, convolutional net algorithms, rectified linear units, and a relatively new learning strategy called “dropout”, in which hidden…
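To make the "artificial brain damage" idea concrete, here is a minimal sketch of dropout applied to one layer of rectified linear hidden units. It assumes the common "inverted dropout" variant: during training each unit is zeroed with probability `p_drop` and the surviving units are scaled up by `1 / (1 - p_drop)`. The original dropout papers instead leave training activations unscaled and shrink the outgoing weights at test time. Both approaches give the same expected activation. The function names `relu` and `dropout` are illustrative only and are not taken from the episode or from any particular library.

```python
import random


def relu(xs):
    """Rectified linear unit applied elementwise: max(0, x)."""
    return [max(0.0, x) for x in xs]


def dropout(activations, p_drop, rng, training=True):
    """Inverted dropout on a list of hidden-unit activations (illustrative sketch).

    Training: each unit is zeroed ("damaged") with probability p_drop, and the
    survivors are scaled by 1 / (1 - p_drop) so every unit keeps its expected value.
    Test: the layer is the identity, so the full network stands in for an
    average over the many thinned sub-networks sampled during training.
    """
    if not 0.0 <= p_drop < 1.0:
        raise ValueError("p_drop must be in [0, 1)")
    if not training or p_drop == 0.0:
        return list(activations)
    keep = 1.0 - p_drop
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


if __name__ == "__main__":
    rng = random.Random(0)
    hidden = relu([0.5, -1.2, 2.0, 0.3])  # -> [0.5, 0.0, 2.0, 0.3]
    # One randomly "damaged" version of the layer, as seen during training.
    print(dropout(hidden, p_drop=0.5, rng=rng))
    # The intact layer used at test time.
    print(dropout(hidden, p_drop=0.5, rng=rng, training=False))  # -> [0.5, 0.0, 2.0, 0.3]
```

Averaging the output of `dropout` over many training-mode calls recovers the undamaged activations. This is the sense in which using the full network at test time approximates averaging the predictions of the sub-networks. For networks with nonlinear layers this is an approximation rather than an exact average.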