
LM101-038: How to Model Knowledge Skill Growth Over Time using Bayesian Nets

Episode Summary:

In this episode, we examine the problem of developing an advanced artificially intelligent technology which is capable of tracking knowledge growth in students in real-time, representing the knowledge state of a student as a skill profile, and automatically defining the concept of a skill without human intervention! The approach can be viewed as a sophisticated state-of-the-art extension of the Item Response Theory approach to Computerized Adaptive Testing Educational Technology described in Episode 37. Both tutorial notes and advanced implementational notes can be found in the show notes at: www.learningmachines101.com .

Show Notes:

Hello everyone! Welcome to the thirty-eighth podcast in the podcast series Learning Machines 101. In this series of podcasts my goal is to discuss important concepts of artificial intelligence and machine learning in hopefully an entertaining and educational manner.

In this episode, we examine the problem of developing an advanced artificially intelligent technology which is capable of tracking knowledge growth in students in real-time, representing the knowledge state of a student as a skill profile, and automatically defining the concept of a skill without human intervention! The approach can be viewed as a sophisticated state-of-the-art extension of the Item Response Theory approach to Computerized Adaptive Testing Educational Technology described in Episode 37.

Typically, a student takes an exam and obtains a grade for the exam for the purposes of assessing the student’s knowledge in a particular knowledge domain. Although this seems like a fairly reasonable strategy at first glance, this widely used assessment procedure makes several assumptions which are probably not satisfied.

One commonly made assumption about knowledge assessment is that the student’s knowledge of a particular domain can be represented by a single number. That is, if one has a collection of 20 students and the goal is to assess their ability to solve calculus problems, then it is possible to rank the students so that the first student has the greatest ability to solve calculus problems and the twentieth student has the least ability to solve calculus problems. It is clear that such a strategy is difficult or impossible to implement in practice. The ability to solve a calculus problem incorporates a variety of diverse skills such as: basic algebra skills, knowledge of trigonometric operations, arithmetic skills, the chain rule in calculus, useful calculus identities, specific definitions such as the definition of a continuous function, and specific theorems such as Taylor’s theorem for series expansions. Some students might have obtained deep mastery of some of these skills but not others. Thus, it seems that the initial fundamental assumption that a grade can be used to represent knowledge is intrinsically flawed. An improved representation of knowledge would be to assume that the knowledge of a student is multidimensional rather than unidimensional. Or, more specifically, we should represent the knowledge of a student as a long list of skills and assess the degree to which each skill in this list has been mastered.

Note that one might criticize this concept as placing old wine in a new bottle. A critic might argue that we just require an exam for each component skill required for solving calculus problems. However, if the critic then proposes to average the exam grades across all component skills, then the averaged multi-exam method again makes the inappropriate assumption that knowledge of a particular domain can be represented by a single number. Alternatively, the critic might simply state that the knowledge of the student is represented as a “skill profile” which is the list of the exam grades associated with the various component skills. If this entire list of exam grades is taken as the knowledge state of the student, then the critic has essentially accepted the idea that the knowledge state of the student should not be represented as a number but rather as a list of numbers. This list of numbers can be geometrically represented as a “skill profile” point in a multi-dimensional space where each axis of the space corresponds to a different skill.
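To make the point about averaging concrete, here is a small hypothetical Python illustration. The skill names, the grades, and the mastery threshold of 60 are all invented for this example. It shows two students who share the same average grade but whose skill profiles, viewed as points in a four-dimensional skill space, are quite different.

```python
# Hypothetical exam grades (0-100) on four component skills for two students.
# Skill names, grades, and the threshold below are illustrative assumptions.
SKILLS = ["algebra", "trigonometry", "arithmetic", "chain_rule"]

student_a = [90, 50, 90, 50]
student_b = [70, 70, 70, 70]


def average_grade(profile):
    """Collapse a skill profile into a single number (the unidimensional view)."""
    return sum(profile) / len(profile)


def skill_gaps(profile, threshold=60):
    """Return the skills whose grade falls below a hypothetical mastery threshold."""
    return [skill for skill, grade in zip(SKILLS, profile) if grade < threshold]


print(average_grade(student_a), average_grade(student_b))  # 70.0 70.0
print(skill_gaps(student_a))  # ['trigonometry', 'chain_rule']
print(skill_gaps(student_b))  # []
```

The single averaged grade cannot tell these students apart. The full profile, however, shows that student A needs help with trigonometry and the chain rule and student B does not.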

But there are still several other problems associated with the concept of having an exam for each component skill that is required for solving a calculus problem. Suppose we decide we want to pursue this approach. Then we have to figure out what the critical component skills required to solve a calculus problem are. This is actually an important, well-recognized step in Educational Testing. But this step is also a costly one. It typically requires considerable amounts of both time and effort to identify the component skills of a complex task. This means that for many applications, the identification of the component skills of complex knowledge domains is not practically feasible. For example, should one include “arithmetic skills” as a component skill in solving calculus problems? Should one include “knowledge of the chain rule” as a component skill in solving calculus problems? Should one include knowledge of solving simple algebraic equations for unknown quantities or knowledge of trigonometry? The research required to investigate these issues could take years to accomplish.

And furthermore, even if all of the component skills of a complex knowledge domain could be correctly identified, one still faces the problem of creating an exam for each component skill. If the items on each exam involve only that component skill and no other skills, then it is quite possible, and even very plausible, that the resulting skill profile of a student in a multi-dimensional space would be useless for assessing performance in the original complex knowledge domain. The exams would only assess the skills in isolation, but it is fundamentally important to assess how effectively a student can utilize and integrate the skills in his or her skill profile to solve real problems in the knowledge domain of interest. For example, suppose that a student takes a variety of exams where each exam assesses a component skill required for solving a calculus problem. The exams might cover areas such as the chain rule, basic algebra, basic arithmetic, and trigonometry. And suppose the student demonstrates mastery of all of these component skills by answering the questions correctly on these exams. It is still possible that the student would be unable to solve a calculus problem because he or she would not know how to integrate these component skills. So, ideally, the component skills should be assessed in the context of solving an actual calculus problem.

And finally, another fundamental assumption is that the student’s knowledge structures are static when it is clear that they are constantly changing and evolving in real-time. Suppose a student participates in an assessment activity. The skill profile assessed as a result of the assessment activity is not permanent but is likely to change on a monthly, weekly, daily, and even hourly basis. This temporal dimension of assessment is crucial for the development and evaluation of sophisticated knowledge assessment procedures. To make things more concrete, consider a human tutor interacting with a student. The human tutor is constantly assessing the knowledge profile of the student. As the student masters specific skills through interactions with the human tutor, the human tutor will focus his or her attention on other skills the student must master in order to become proficient in solving calculus problems.

Dr. Jose Gonzalez-Brenes and Dr. Jack Mostow at the Language Technologies Institute at Carnegie Mellon University have been developing a unified framework for simultaneously dealing with all of the problems and challenges which we have discussed so far. They refer to their approach as “Dynamic Cognitive Tracing Topical Hidden Markov Model”. The details of their approach can be found in the three papers in the show notes of this episode at: www.learningmachines101.com . I should comment that Dr. Gonzalez-Brenes is currently at the Pearson School Research Network in Philadelphia.

In their paper “Dynamic Cognitive Tracing: Towards Unified Discovery of Student and Cognitive Models”, Gonzalez-Brenes and Mostow provide the following example of an automated reading tutor which presents phrases to children one word at a time. The child is visually presented with a word and asked to read the word aloud. The child pronounces the word. Then the next word is visually presented and the child reads the next word aloud. The automated tutor compares the correct pronunciation of the word with the child’s pronunciation and then scores the child’s performance for each word as “correct” or “incorrect”. Correct pronunciation of a word requires a variety of component skills. For example, the child must have good visual perception skills, good spelling-to-sound correspondence skills (including both regular and exception rules for pronunciation), and knowledge of idiosyncratic word pronunciations. In addition, semantic and pragmatic knowledge can also facilitate performance.

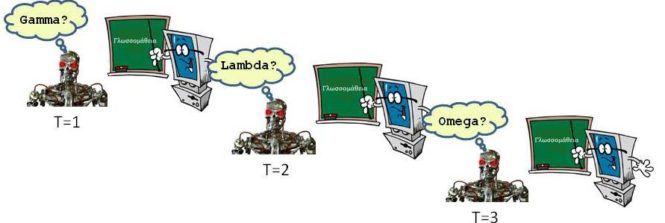

The Dynamic Cognitive Tracing model attempts to predict whether a child will correctly pronounce or incorrectly pronounce the next word in a sequence of words by automatically adjusting both student-specific parameters and item-specific parameters. Student-specific parameters are assumed to be time-varying so each time a word is pronounced, the student’s skill profile is estimated. Other student-specific parameters could be estimated to characterize how the student’s current skill profile will change at each time step. In addition, item-specific parameters are estimated which specify which combinations of skills are required to pronounce a word correctly.

In our calculus domain example, one could imagine a student interacting with a calculus tutor which presents one calculus problem at a time to the student. Just as a child tries to pronounce a word correctly each time a word is presented, in the calculus domain the student tries to solve a particular calculus problem each time a new problem is presented. Just as in the domain of reading, each calculus problem requires a unique combination of skills, and the skill profile of the student is assumed to change and evolve after each calculus problem is presented.

So now let’s discuss some of the technical details of the Dynamic Cognitive Tracing Topical Hidden Markov Model. We will present a simplified discussion of this approach based upon the paper by Gonzalez-Brenes which was presented at the 2015 International Conference on Artificial Intelligence and Statistics. It is assumed that one begins with a fixed number of items. An item corresponds to a particular template for generating a question on an exam. It is assumed that the answer to an item is either “correct” or “incorrect”. For example, an item might be generated from a template which specifies a problem consisting of applying the calculus chain rule to compute the derivative of a function of a function of a single variable. The responses to the problem might be multiple choice responses corresponding to different choices for the derivative. For each item, it is assumed that there exists a probability distribution which specifies the probability that each of a fixed number of possible skills is relevant to the solution of the problem. Technically, this assumption means that only one skill is required to answer a particular item on any given occasion, and that the percentage of times a skill S is required to answer item I is equal to P_IS. In spirit, however, the semantic intention of this assumption is that all skills are relevant to the solution of item I, with the degree of relevance of skill S being defined by the probability P_IS. This is a subtle semantic interpretation of the model, but it is crucial for understanding the objective of the modeling process. It is based upon interpreting the probability distribution as a theory of belief as described in Episode 8 but estimating this belief distribution using frequentist concepts. In other words, given a particular item, the probability model assigns a degree of belief that a particular skill is used for that item. These degrees of belief are then estimated using frequentist assumptions.
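The sketch below illustrates the two readings of P_IS. On any single occasion, exactly one skill is drawn for item I with probability P_IS. Taken over many occasions, the relative frequency of each skill estimates P_IS. In the actual model, which skill was used is a hidden quantity that has to be inferred. Here we make the simplifying assumption that those skill assignments were directly observed. The skill names, the counts, and both helper functions are hypothetical.

```python
import random
from collections import Counter


def estimate_item_skill_distribution(skill_uses, skills):
    """Relative-frequency (frequentist) estimate of P_IS for a single item I.

    skill_uses: one skill label per occasion on which item I was answered.
    ASSUMPTION: these labels are treated as observed; in the real model
    they are latent and must be inferred.
    """
    counts = Counter(skill_uses)
    total = len(skill_uses)
    return {skill: counts[skill] / total for skill in skills}


def sample_skill(p_is, rng):
    """Draw the single skill used on one occasion, with probability P_IS."""
    skills = list(p_is)
    return rng.choices(skills, weights=[p_is[s] for s in skills], k=1)[0]


skills = ["chain_rule", "algebra", "trigonometry"]
uses = ["chain_rule"] * 6 + ["algebra"] * 3 + ["trigonometry"] * 1
p_is = estimate_item_skill_distribution(uses, skills)
print(p_is)  # {'chain_rule': 0.6, 'algebra': 0.3, 'trigonometry': 0.1}
print(sample_skill(p_is, random.Random(0)))
```

Under the “degree of relevance” reading, this item is mostly a chain-rule item. It still draws partly on algebra and, to a small degree, on trigonometry.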

Thus, the item-specific parameters associated with an item specify a probability distribution over the set of skills. These item-specific parameters do not depend upon the ability of a particular student but rather are common to all students in the student population. In addition, we have student-specific parameters which are different for each student. These student-specific parameters specify the probability distribution of the student’s level of mastery of skill S at time t given the student’s level of mastery of skill S at time t-1. It is assumed that there are different levels of mastery for each skill. For example, one might imagine there are three levels of mastery for a particular skill which are: novice, intermediate, and expert.
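For a single skill with the three mastery levels above, the student-specific parameters can be pictured as a 3×3 transition matrix. The matrix below is a minimal sketch with invented numbers. It assumes mastery never decreases, which is a simplification and not necessarily what the paper assumes. Starting from a pure novice, it shows how the distribution over mastery levels evolves one time step at a time.

```python
LEVELS = ["novice", "intermediate", "expert"]

# Hypothetical student-specific transition matrix for a single skill S:
# TRANSITION[i][j] = P(level j at time t | level i at time t-1); each row sums to 1.
TRANSITION = [
    [0.7, 0.3, 0.0],  # a novice stays novice or moves up to intermediate
    [0.0, 0.8, 0.2],  # an intermediate stays or moves up to expert
    [0.0, 0.0, 1.0],  # simplifying assumption: experts never forget
]


def step(dist, transition):
    """One Markov-chain step: P(level_t = j) = sum_i P(level_{t-1} = i) * T[i][j]."""
    n = len(dist)
    return [sum(dist[i] * transition[i][j] for i in range(n)) for j in range(n)]


def propagate(dist, transition, steps):
    """Return the mastery distributions at times 0, 1, ..., steps."""
    history = [list(dist)]
    for _ in range(steps):
        dist = step(dist, transition)
        history.append(dist)
    return history


for t, dist in enumerate(propagate([1.0, 0.0, 0.0], TRANSITION, 3)):
    print(t, {lvl: round(p, 3) for lvl, p in zip(LEVELS, dist)})
```

After two steps, the hypothetical student is a novice with probability 0.49, intermediate with probability 0.45, and expert with probability 0.06. A faster learner would be modeled by a different matrix with larger off-diagonal entries.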

This means that the time-course of a student’s mastery of skills is represented by a collection of probability distributions which specify the probability of the skill profile of the student at time t given the skill profile of the student at time t-1. This is essentially a Markov chain as described in Episode 11 and Episode 21. Finally, the probability that the student answers an item correctly at time t depends upon two things. The first is the current skill profile, which indicates the knowledge level (such as “novice” or “expert”) for each skill. The second is the probability that each skill is relevant for answering the item presented at the current time. Because the skill profile itself is never directly observed and must be inferred from the student’s responses, the resulting model is a hidden Markov model. A hidden Markov model is a special type of Bayesian network that generalizes the Markov chain approach.
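Putting the pieces together, the predicted probability of a correct answer is a mixture. It averages over which skill the item calls on (weighted by P_IS) and over the student’s current mastery level for that skill. The sketch below computes this prediction. It makes the hypothetical assumption that P(correct | mastery level) is the same for every skill. Because the formula is linear in each skill’s mastery distribution, per-skill marginal distributions are enough for this one-step prediction.

```python
def prob_correct(item_skill_dist, mastery, p_correct_given_level):
    """Predicted P(correct) for one item at the current time step.

    item_skill_dist: P_IS for the item, e.g. {"chain_rule": 0.6, "algebra": 0.4}
    mastery: for each skill, the current distribution over mastery levels
    p_correct_given_level: hypothetical P(correct | mastery level), assumed
        here to be shared by all skills for simplicity
    """
    total = 0.0
    for skill, p_is in item_skill_dist.items():
        # P(correct | skill S is the one used) = sum_k P(level_S = k) * P(correct | k)
        p_if_used = sum(p_level * p_correct_given_level[level]
                        for level, p_level in mastery[skill].items())
        total += p_is * p_if_used
    return total


item = {"chain_rule": 0.6, "algebra": 0.4}
mastery = {
    "chain_rule": {"novice": 0.5, "intermediate": 0.5, "expert": 0.0},
    "algebra": {"novice": 0.0, "intermediate": 0.2, "expert": 0.8},
}
p_correct = {"novice": 0.2, "intermediate": 0.6, "expert": 0.9}
print(round(prob_correct(item, mastery, p_correct), 3))  # 0.576
```

Here the chain-rule component contributes 0.6 × 0.4 = 0.24 and the algebra component contributes 0.4 × 0.84 = 0.336. All numbers are illustrative.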

In a manner analogous to the simpler Item Response Theory approach described in Episode 37, we thus can estimate parameters of the model which are student-specific and parameters of the model which are item-specific. This is extremely powerful for reasons which were discussed in Episode 37 and which will be briefly discussed here. Notice that inference and parameter estimation for this model are non-trivial problems. If the parameters of the model are known, then a Gibbs sampling methodology such as the one described in Episode 21 may be used to make predictions regarding specific and average student response rates to items at particular points in knowledge development. A deterministic version of the Expectation-Maximization algorithm, which was described in Episode 22, was used to estimate the parameters of the model. Specific details regarding the inference and parameter estimation algorithms are provided in the original paper published at the Artificial Intelligence and Statistics conference.
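Gibbs sampling and EM for the full model do not fit into a short example. In the simplest special case of a single skill, however, the hidden mastery level can be tracked exactly with the standard hidden Markov model forward (filtering) recursion. The sketch below uses that technique in place of the Gibbs sampler mentioned above. The transition matrix, the P(correct | level) values, and the function name `forward_filter` are all hypothetical.

```python
# Hypothetical parameters for ONE skill with levels novice/intermediate/expert.
TRANSITION = [
    [0.7, 0.3, 0.0],
    [0.0, 0.8, 0.2],
    [0.0, 0.0, 1.0],
]
P_CORRECT = [0.2, 0.6, 0.9]  # P(correct | level), assumed strictly between 0 and 1


def forward_filter(responses, prior, transition, p_correct):
    """Exact HMM forward filtering for one skill.

    Returns P(level at t | responses up to and including t) for every t.
    """
    n = len(prior)
    belief = list(prior)
    filtered = []
    for t, correct in enumerate(responses):
        if t > 0:
            # Predict: push the previous belief through the Markov transition.
            belief = [sum(belief[i] * transition[i][j] for i in range(n))
                      for j in range(n)]
        # Update: Bayes' rule with the likelihood of the observed response.
        likelihood = [p if correct else 1.0 - p for p in p_correct]
        unnormalized = [b * l for b, l in zip(belief, likelihood)]
        z = sum(unnormalized)
        belief = [u / z for u in unnormalized]
        filtered.append(belief)
    return filtered


for t, b in enumerate(forward_filter([True, True, True], [0.6, 0.3, 0.1],
                                     TRANSITION, P_CORRECT)):
    print(t, [round(p, 3) for p in b])
```

Each correct answer shifts probability toward “expert”. With several skills, the same recursion applies to the joint skill profile, but the number of joint states grows exponentially with the number of skills. This is one reason sampling-based methods become attractive.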

We now discuss the results of the study reported by Dr. Gonzalez-Brenes at the 2015 AISTATS conference. The model was evaluated on two data sets. The first data set had an item bank of 5,233 different items and 123 students and covered material for a first-year Algebra I course. The second data set also covered the topic of Algebra I and included data from 205 students and an item bank of 3,081 questions. The data sets were split into a training data set and a test data set as described in Episode 12.

The set of results reported here is based upon using the Dynamic Cognitive Tracing Topical HMM, which we will call the Topical HMM, to predict a student’s response to the next item given the student’s responses to previous items. The Topical HMM model was trained on the training data and then tested on the test data as described in Episode 12. Discrimination performance rather than accuracy was used to evaluate performance. The performance measure simultaneously takes into account two rates. The first is the percentage of times the model predicts a correct response when the student actually responds correctly (the hit rate). The second is the percentage of times the model predicts a correct response when the student actually responds incorrectly (the false alarm rate). Specifically, the dependent measure was the area under the ROC curve, which summarizes this trade-off across all possible decision thresholds. A reference to this measure is provided in the show notes of this episode.
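One convenient way to compute the area under the ROC curve uses its pairwise interpretation. The AUC equals the probability that a randomly chosen correct response received a higher predicted score than a randomly chosen incorrect response, with ties counting one half. The minimal sketch below implements that definition directly. It takes time proportional to the number of correct/incorrect pairs, which is fine for illustration. For large data sets, a sort-based method or a library routine would be preferable.

```python
def auc(labels, scores):
    """Area under the ROC curve via its pairwise (Mann-Whitney) interpretation.

    labels: 1/True for a correct response, 0/False for an incorrect one.
    scores: the model's predicted probability of a correct response.
    """
    pos = [s for y, s in zip(labels, scores) if y]
    neg = [s for y, s in zip(labels, scores) if not y]
    if not pos or not neg:
        raise ValueError("AUC needs at least one correct and one incorrect response")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0   # correct response ranked above incorrect one
            elif p == n:
                wins += 0.5   # ties count as half
    return wins / (len(pos) * len(neg))


print(auc([1, 1, 0, 0], [0.9, 0.4, 0.6, 0.2]))  # 0.75
```

An AUC of 0.5 corresponds to random guessing and 1.0 to perfect discrimination. This is why a model scoring “only slightly better than random guessing” has an AUC close to 0.5.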

The Topical HMM Model was then compared to several competing methods of analysis. The Student Performance Model estimated the probability an item is answered correctly at time t as the fraction of times it was answered correctly up to time t-1. The Expert Item to Skill Mapping Topical HMM is essentially the same as the Topical HMM Model, but the item-specific parameters which specify the probability distribution of skills for a given item are chosen by domain knowledge experts. Those parameters are then fixed and not learned. As a control condition, the Random Item to Skill Mapping Topical HMM was also created. It uses item-specific parameters which specify the probability distribution of skills for a given item, but those parameter values are randomly chosen. The results of the discrimination performance on the test data showed that the Topical HMM Model and the Expert Item to Skill Mapping Topical HMM exhibited good discrimination performance. The Student Performance Model and the Random Item to Skill Mapping Topical HMM performed only slightly better than random guessing. This demonstrates that the Topical HMM Model is capable of learning skill categories without requiring the use of a domain knowledge expert, and that these skill categories are important to predicting overall performance on the knowledge domain tasks. One disadvantage of the Topical HMM Model, however, was that it was not always easy to interpret the skill categories which the Topical HMM Model automatically created.
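The Student Performance Model baseline described above is simple enough to sketch directly. The hypothetical helper below applies the running fraction to one time-ordered sequence of responses. The episode does not say how the very first response is handled, when no history is available. The default prediction of 0.5 in that case is our own assumption.

```python
def running_fraction_baseline(responses, default=0.5):
    """Predict P(correct at t) as the fraction correct over responses 1..t-1.

    responses: booleans in time order (True = answered correctly).
    default: hypothetical prediction used for the first response, when
        there is no history yet.
    """
    predictions = []
    n_correct = 0
    for t, correct in enumerate(responses):
        # Only responses strictly before time t are used for the prediction.
        predictions.append(n_correct / t if t > 0 else default)
        n_correct += int(correct)
    return predictions


print(running_fraction_baseline([True, False, True, True]))
```

Because this baseline has no notion of skills or of which item is being presented, it cannot anticipate that a student who is strong on one skill may still fail an item that draws on a different skill.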

In summary, this is an example of a state-of-the-art approach to knowledge assessment which contrasts nicely with the classical (and still very powerful) Item Response Theory approach to knowledge assessment discussed in Episode 37.

In future episodes of Learning Machines 101, we will talk about other state-of-the-art approaches to knowledge assessment such as Automated Essay Grading Technology and Knowledge Space Theory. These more recent developments represent important directions in Educational Technology Assessment which address fundamental limitations of Item Response Theory.

So thanks for your participation in today’s show! I greatly appreciate your support and interest in the show!! If you liked this show, then I would really appreciate it if you would share this show with your friends and colleagues via email, Twitter, Facebook, LinkedIn, or any other way you typically communicate with your friends and colleagues.

Also, I would appreciate a review in iTunes. You can provide me with an iTunes review by simply clicking on the button on the front page of the web page: https://www.learningmachines101.com which has a link to the iTunes store. Or you can just go directly to the iTunes store.

If you are a member of the Learning Machines 101 community, please update your user profile. If you look carefully, you can provide specific information about your interests on the user profile when you register for Learning Machines 101 or when you receive the bi-monthly Learning Machines 101 email update!

You can update your user profile when you receive the email newsletter by simply clicking on the: “Let us know what you want to hear” link!

Or, if you are not a member of the Learning Machines 101 community, when you join the community by visiting our website at www.learningmachines101.com, you will have the opportunity to update your user profile at that time.

Also check out the Statistical Machine Learning Forum on LinkedIn and Twitter at “lm101talk”.

From time to time, I will review the profiles of members of the Learning Machines 101 community and do my best to talk about topics of interest to the members of this group!

Further Reading:

(Gonzalez-Brenes and Mostow, 2012). Dynamic Cognitive Tracing: Towards Unified Discovery of Student and Cognitive Models. Educational Data Mining.

(Gonzalez-Brenes, 2015). Modeling Skill Acquisition over Time with Sequence and Topic Modeling. 2015 International Conference on Artificial Intelligence and Statistics.

Wikipedia Article on AUROC (Area under ROC Curve) Discrimination Measure.

Wikipedia Item Response Theory Article

International Association for Computerized and Adaptive Testing

Automated Essay Grading Technology

Related Episodes of Learning Machines 101:

Episode 8: Using Probability to Represent Beliefs

Episode 12: Train and Test Data Sets

Episode 21: Gibbs Sampling and Monte Carlo Markov Chain

Episode 22: Expectation Maximization

Episode 37: Item Response Theory